What is Retrieval-Augmented Generation (RAG)?

RAG is a framework that enhances large language models (LLMs) by connecting them to live data sources. Instead of relying solely on static training data, a RAG system retrieves relevant, up-to-date information at query time and supplies it to the model as context, producing grounded, context-aware responses.

At Search.co, we help you implement, scale, and optimize RAG systems using vector databases, embeddings, high-speed proxies, and structured retrieval workflows.

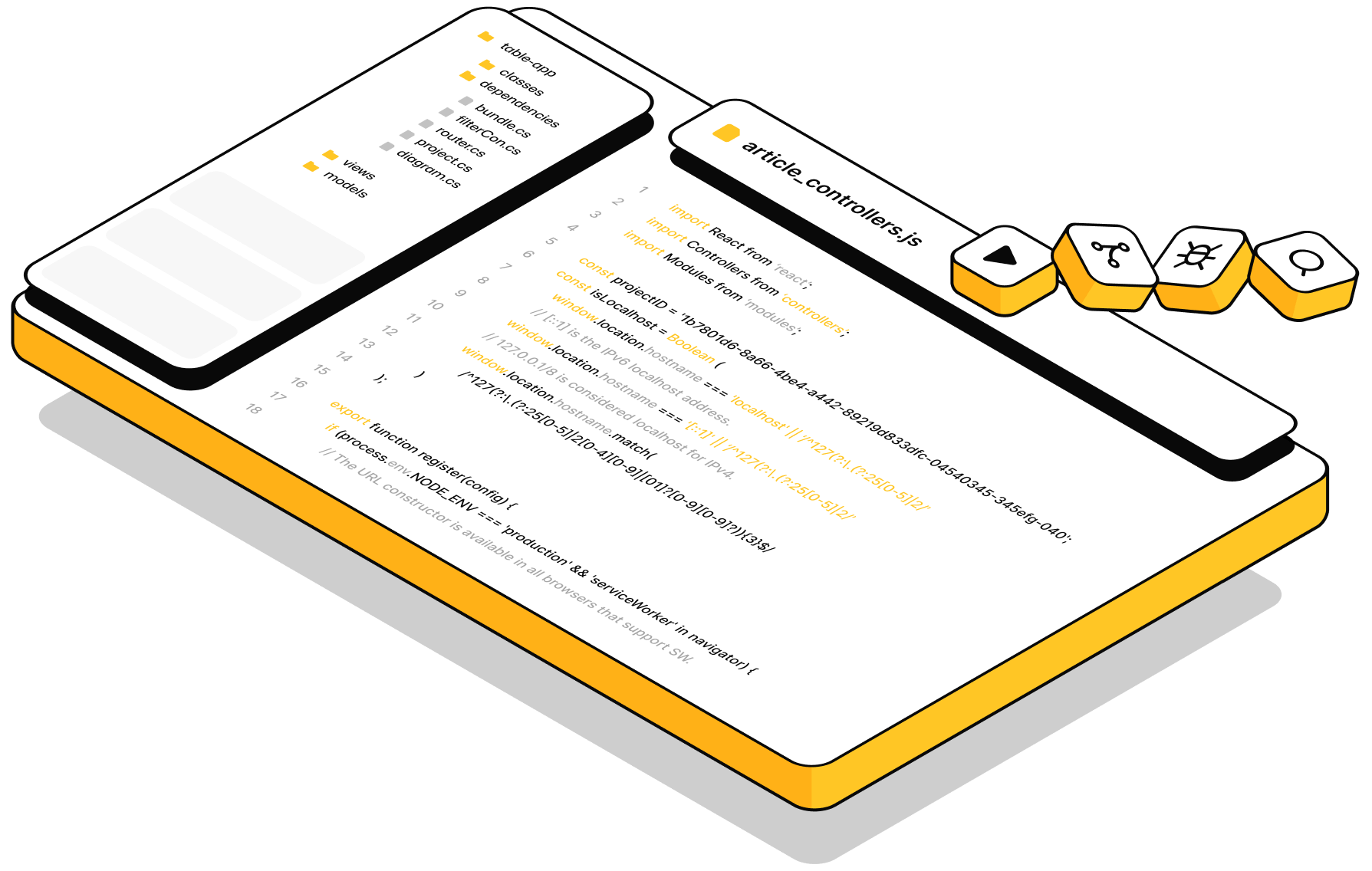

Tech Stack We Support

Use RAG For:

Why Choose Search.co for AI/RAG?

How It Works

01. Embed Your Documents

Split unstructured text into chunks, convert each chunk into a vector embedding, and store the embeddings in a vector DB.
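To make the embedding step concrete, here is a minimal, self-contained sketch. It assumes a toy hashed bag-of-words `embed()` function as a stand-in for a real embedding model, and a hypothetical in-memory `InMemoryVectorStore` in place of a vector database. The names, the 50-word chunk size, and the 256-dimension vector size are illustrative assumptions, not Search.co's implementation.

```python
import math
import re
import zlib

DIM = 256  # hypothetical vector size; real embedding models define their own

def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec

class InMemoryVectorStore:
    """Hypothetical minimal vector store holding (chunk_id, vector, text) records."""

    def __init__(self) -> None:
        self.records: list[tuple[str, list[float], str]] = []

    def add(self, doc_id: str, text: str) -> None:
        # Chunk the document, embed each chunk, and keep the vector next to its text.
        for n, chunk in enumerate(chunk_text(text)):
            self.records.append((f"{doc_id}#{n}", embed(chunk), chunk))

store = InMemoryVectorStore()
store.add("faq", "Search.co supports residential, datacenter, and mobile proxies.")
print([chunk_id for chunk_id, _, _ in store.records])  # ['faq#0']
```

Keeping each chunk's text next to its vector means the retrieval step can hand the original passage, not just an ID, to the LLM later.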

02. Add Real-Time Retrieval

Fetch relevant results from your database or external sources via proxies or APIs.
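The ranking part of this step can be sketched as a brute-force cosine-similarity search. This minimal illustration assumes the query and documents have already been embedded; the three-dimensional vectors and document IDs are made up, and fetching from external sources via proxies or APIs is not shown.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Brute-force search: score every stored vector and return the k best (id, score) pairs."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Made-up 3-dimensional vectors for illustration only.
index = {
    "pricing": [0.9, 0.1, 0.0],
    "proxies": [0.1, 0.9, 0.2],
    "security": [0.0, 0.2, 0.9],
}
for doc_id, score in top_k([0.2, 0.8, 0.1], index, k=2):
    print(doc_id, round(score, 3))  # "proxies" ranks first, then "pricing"
```

Scanning every vector is fine for a demo. Production vector databases typically use approximate nearest-neighbor indexes so that search stays fast as the collection grows.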

03. RAG Pipeline Inference

Pass the retrieved data to an LLM together with the user's query, so it generates a response grounded in that context.
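A common way to pass retrieved data to an LLM is to number the passages inside the prompt and instruct the model to answer only from them. The sketch below builds such a prompt. The function name, instruction wording, and character-based context budget are assumptions. The actual model call is omitted because it depends on your LLM provider's API.

```python
def build_grounded_prompt(question: str, passages: list[str], max_chars: int = 4000) -> str:
    """Assemble a prompt asking the LLM to answer only from the numbered passages."""
    context_lines: list[str] = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        line = f"[{i}] {passage.strip()}"
        if used + len(line) > max_chars:
            break  # rough context budget measured in characters (an assumption)
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the numbered context below. "
        "Cite sources like [1]. If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_grounded_prompt(
    "Which proxy types are supported?",
    ["Residential, datacenter, and mobile proxies are supported.", "Pipelines can run on Kubernetes."],
)
print(prompt)
```

Passages are expected in ranked order, so when the budget runs out, the lowest-ranked ones are dropped first.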

04. Deploy & Iterate

Test, improve, and scale your RAG system with our support.


Frequently Asked Questions

Frequently asked questions for enterprise search

What is Search.co?

Search.co is a unified platform for data extraction and ingestion. We provide high-performance proxy networks to collect data from anywhere on the web, and real-time AI-native pipelines to transform that data into actionable insights using SQL and LLM-powered logic.

What is Search.co for?

Search.co is built for developers, data teams, growth marketers, AI researchers, and businesses that need structured, real-time data from external sources—without building and maintaining complex scraping or ingestion stacks.

What types of proxies do you offer?

We support a full range of proxies including residential, datacenter (IPv4 & IPv6), mobile (static & rotating), SOCKS5, and unlimited bandwidth proxies.

Can I rotate proxies automatically?

Yes. You can configure automatic rotation logic based on time, session, or custom rules to avoid IP bans and CAPTCHAs.
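Rotation rules like these can also be expressed on the client side. The class below is a hypothetical sketch, not Search.co's API. It switches proxies on a fixed time interval, pins a proxy to a session ID once assigned, and exposes a `force_rotate()` hook for custom rules such as reacting to a ban. The proxy URLs are placeholders.

```python
import itertools
import time
from typing import Callable, Optional

class ProxyRotator:
    """Hypothetical client-side rotator: time-based rotation plus sticky sessions."""

    def __init__(self, proxies: list[str], rotate_every_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        if not proxies:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(proxies)  # round-robin order
        self._rotate_every_s = rotate_every_s
        self._clock = clock
        self._current = next(self._cycle)
        self._last_switch = clock()
        self._sessions: dict[str, str] = {}

    def get(self, session_id: Optional[str] = None) -> str:
        # Session rule: reuse the proxy first assigned to this session.
        if session_id is not None and session_id in self._sessions:
            return self._sessions[session_id]
        # Time rule: advance to the next proxy once the interval has elapsed.
        now = self._clock()
        if now - self._last_switch >= self._rotate_every_s:
            self._current = next(self._cycle)
            self._last_switch = now
        if session_id is not None:
            self._sessions[session_id] = self._current
        return self._current

    def force_rotate(self) -> str:
        """Custom-rule hook, e.g. call after a ban or CAPTCHA response."""
        self._current = next(self._cycle)
        self._last_switch = self._clock()
        return self._current

rotator = ProxyRotator(["http://proxy-a.example:8000", "http://proxy-b.example:8000"])
print(rotator.get(session_id="checkout-42"))
```

To send a request through the selected proxy with the standard library, you could pass the URL to `urllib.request.ProxyHandler({"http": url, "https": url})` and build an opener from that handler.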

What is the difference between residential, datacenter, and mobile proxies?

Residential Proxies use real devices with ISP-assigned IPs. Ideal for stealth scraping.

Datacenter Proxies are faster and more cost-efficient but easier to detect.

Mobile Proxies offer maximum trust for mobile-app scraping or anti-fraud use cases.

What is the ingestion engine built on?

Our ingestion engine uses a SQL-first approach, built with Apache Flink, GraphQL, and DataSQRL under the hood. You define transformations in SQL or the SQRL language; we handle scaling, streaming, and deployment.

What formats and protocols are supported for ingestion?

We support Kafka, REST, Parquet, GraphQL, JDBC, flat files, and streaming event logs. You can also ingest directly from our proxy-extracted data streams.

Can I use LLMs in my pipeline?

Yes. Our architecture supports Retrieval-Augmented Generation (RAG), agentic workflows, and transformation agents using custom or embedded LLMs.

What programming languages are supported?

You can connect Search.co to your stack via Python, Node.js, Go, .NET, Ruby, and more. We offer client libraries and REST/GraphQL APIs.

Is this suitable for real-time data?

Absolutely. The pipeline is built for both batch and real-time processing, with millisecond latency for dashboards, alerts, or APIs.

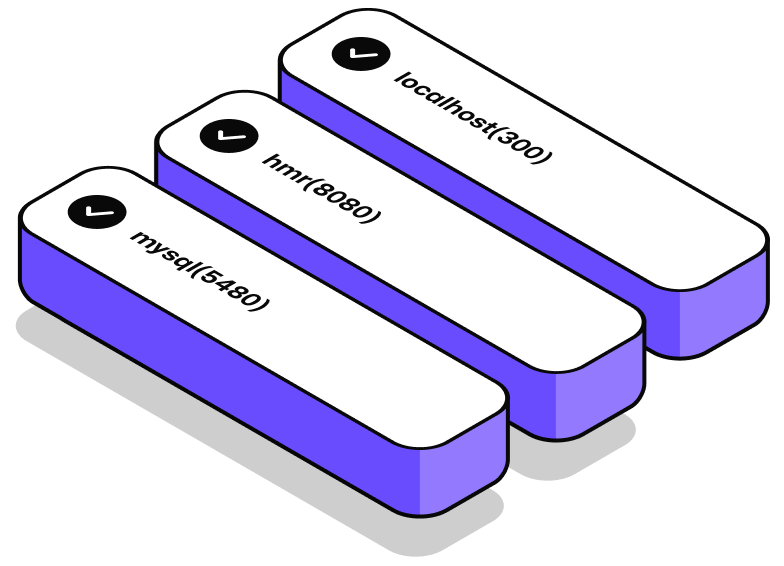

Can I deploy on my own infrastructure?

Yes. The ingestion engine is containerized and deployable on Kubernetes or Docker. For proxy routing, we handle the IP infrastructure on our end.

Is there bandwidth or request throttling?

No. We offer plans with unlimited bandwidth and support high-throughput scraping across geographies and endpoints.

Does Search.co integrate with BI tools?

Yes. You can pipe clean data into Looker, Tableau, Power BI, or any SQL-based BI tool via JDBC or GraphQL.

Can I use Search.co for SEO tracking and SERP scraping?

Yes. You can monitor rankings, ads, featured snippets, and competitor content at scale using rotating residential or mobile proxies.

Is this good for brand monitoring or product scraping?

Yes. You can monitor counterfeit listings, reseller pricing, product reviews, and inventory across platforms—completely anonymously.

Does Search.co support RAG and retrieval for AI agents?

Yes. You can combine vector search with live ingestion to power agentic AI, personalized recommendations, and context-aware chat.

What industries do you serve?

We work with companies across SaaS, fintech, healthcare, e-commerce, legal, and media: essentially any organization that needs external data in real time.

Is the platform secure and compliant?

Yes. We follow best practices in data encryption, access control, and logging. Ingestion pipelines are deployable to HIPAA-, SOC 2-, or GDPR-compliant environments.

Will proxies leak my identity or IP?

No. All proxies are fully anonymized and support rotating headers, user agents, and advanced fingerprinting resistance.
